In 2019, I started with my first contribution to WebRTC. This was all about screen sharing support on Linux Wayland sessions, using xdg-desktop-portal and PipeWire. Back then, it was quite simple, we only had PipeWire 0.2 and all portal backends supported only screen sharing (no window sharing). While this was relatively easy, it was not ideal as each screen sharing request involved two portal dialogs to get the screen content on the web page itself. For me it was a big success, because I made quite a significant contribution to such a big project, which is used by many people, and a project which is used by all modern web browsers.

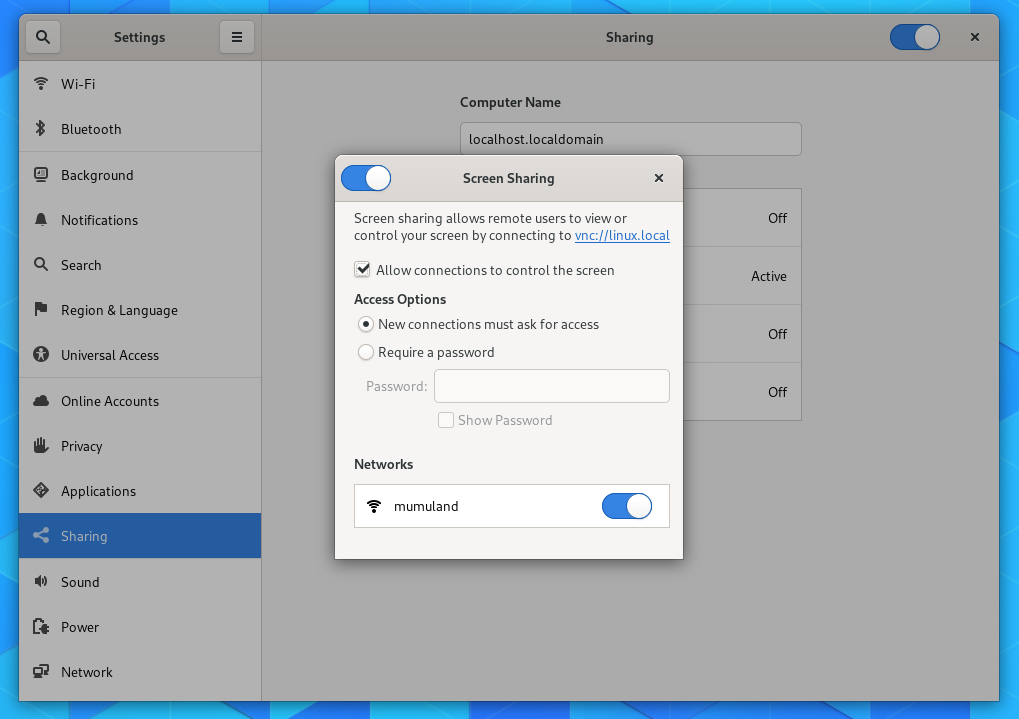

At the beginning of 2020, the year everyone would like to erase from their memories, we got PipeWire 0.3 (with slightly different API) and later with xdg-desktop-portal-gtk and xdg-desktop-portal-kde (later this year) people were finally able to share application windows. Support for all of this was lacking in WebRTC, because back then those were not available. I wanted to tackle all issues at once, bring support for window sharing and get rid of the “dialog hell” with portals, which was even worse with the new window sharing capabilities in portal backends.

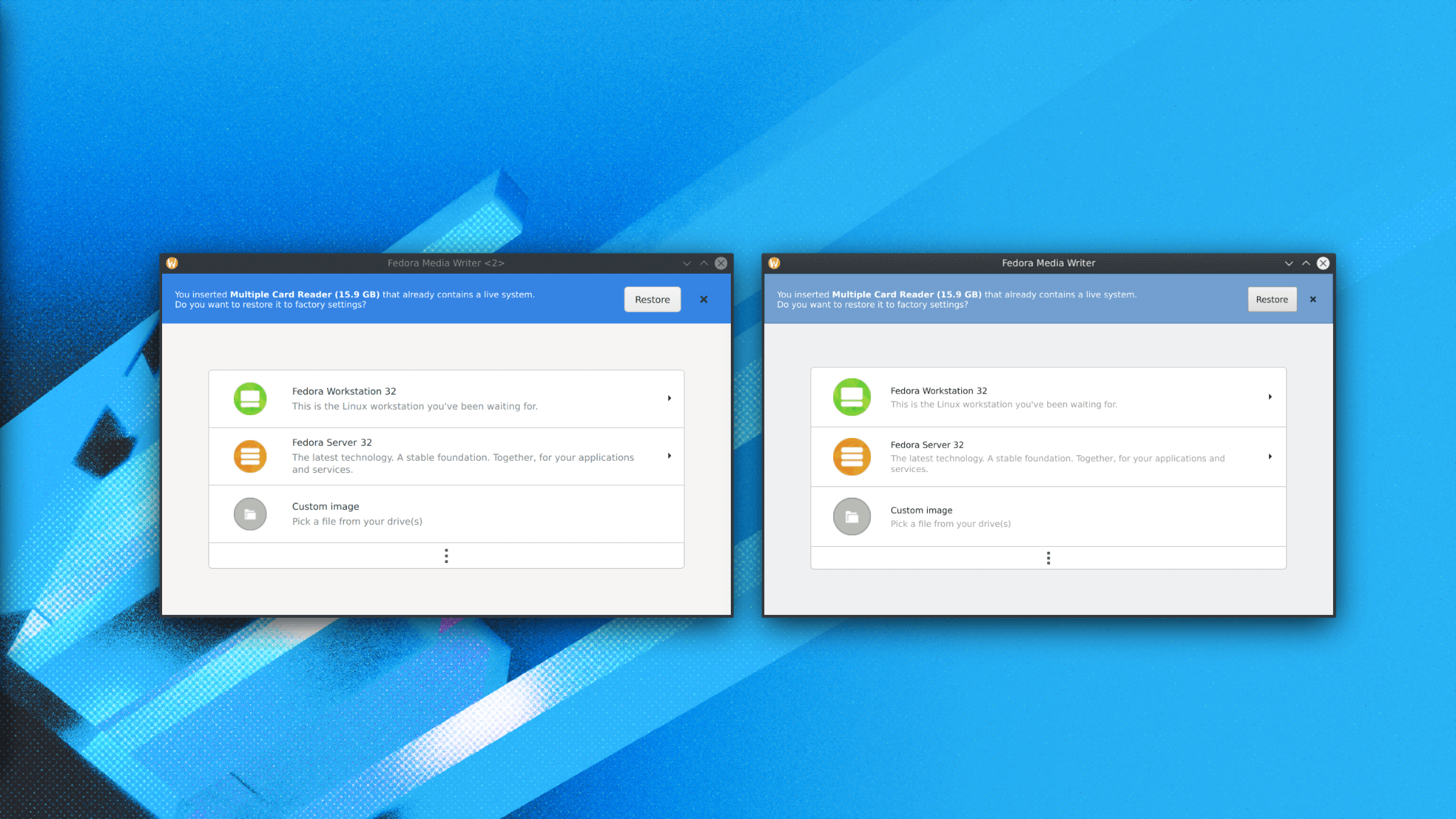

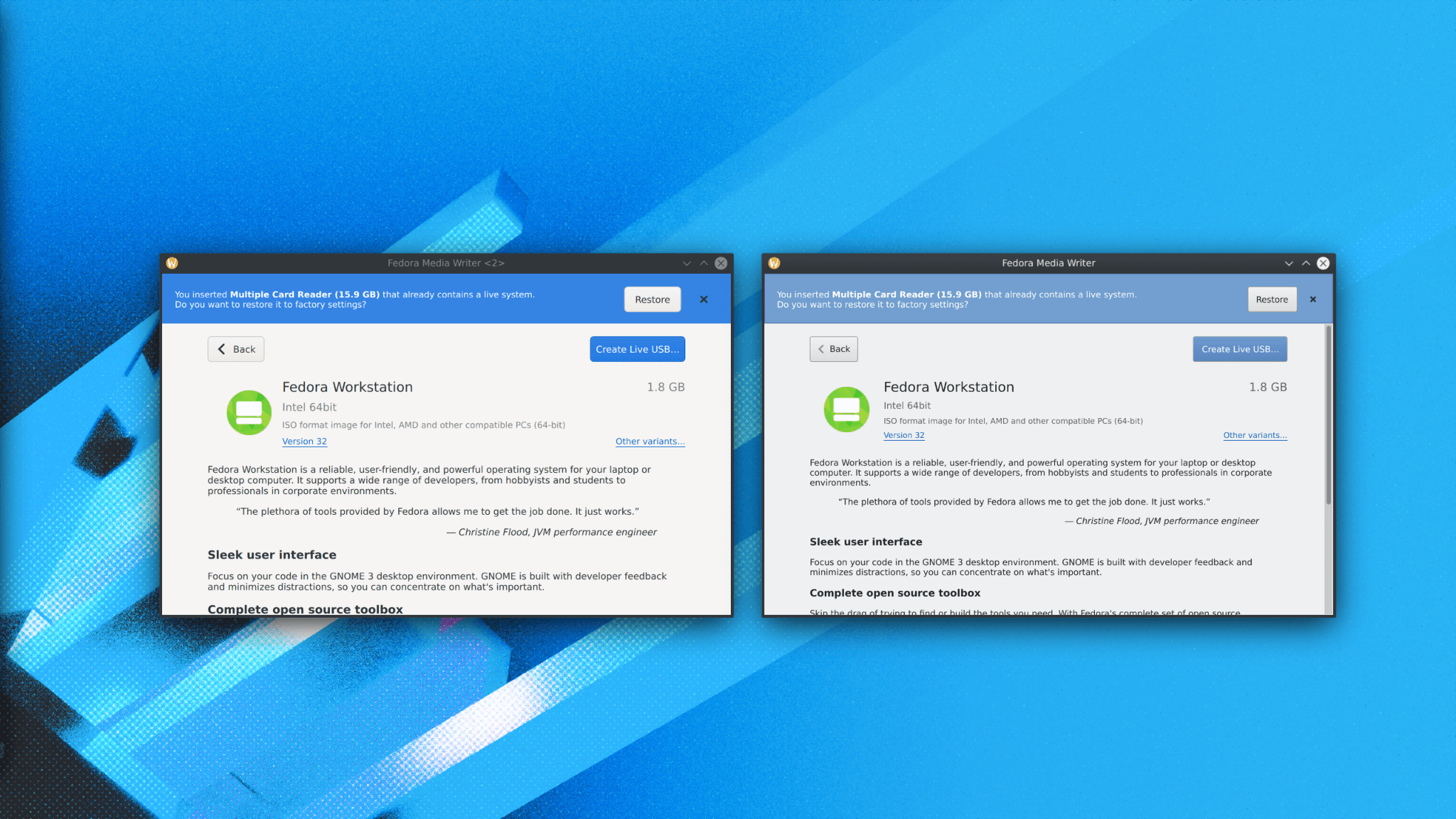

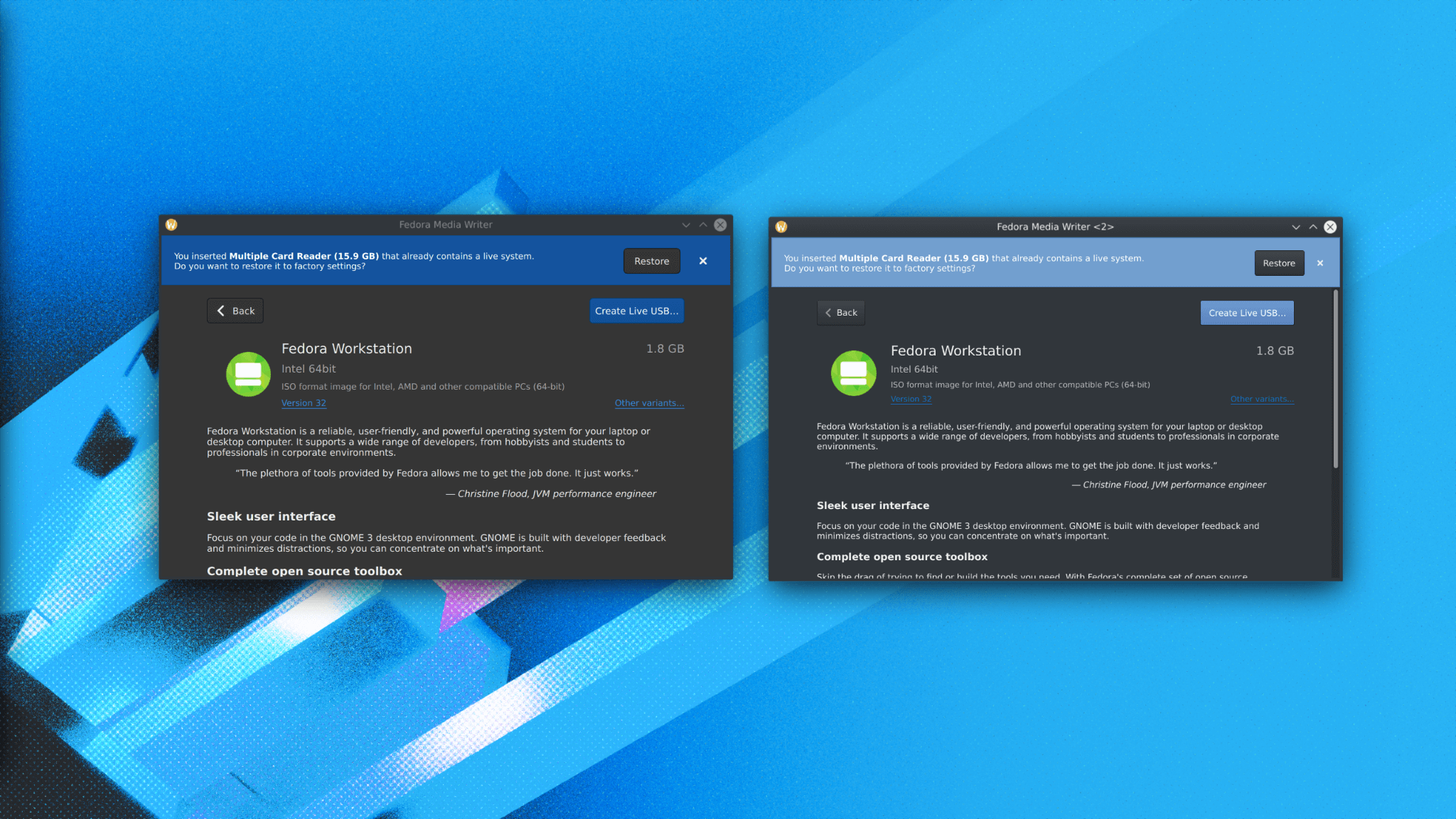

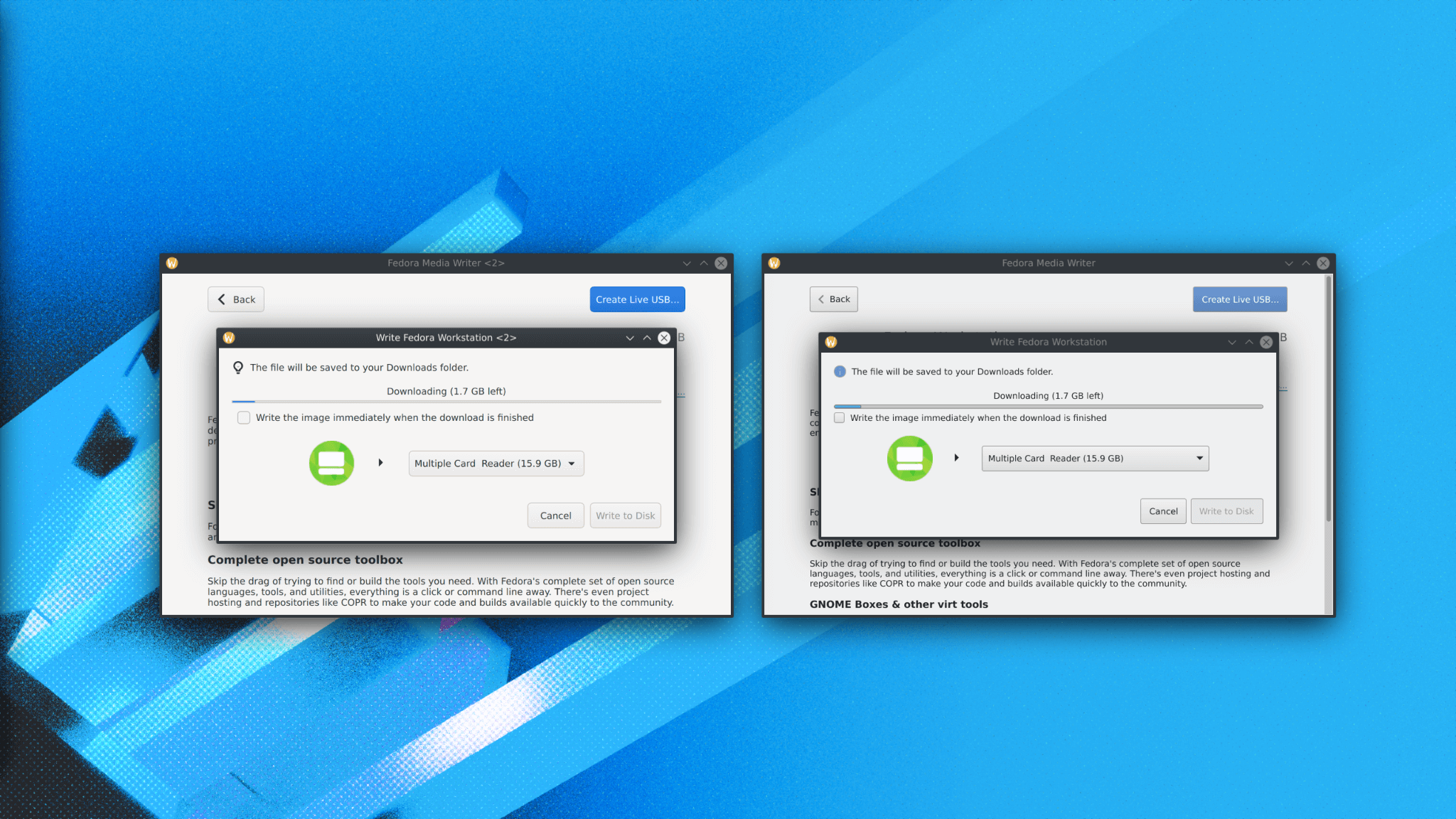

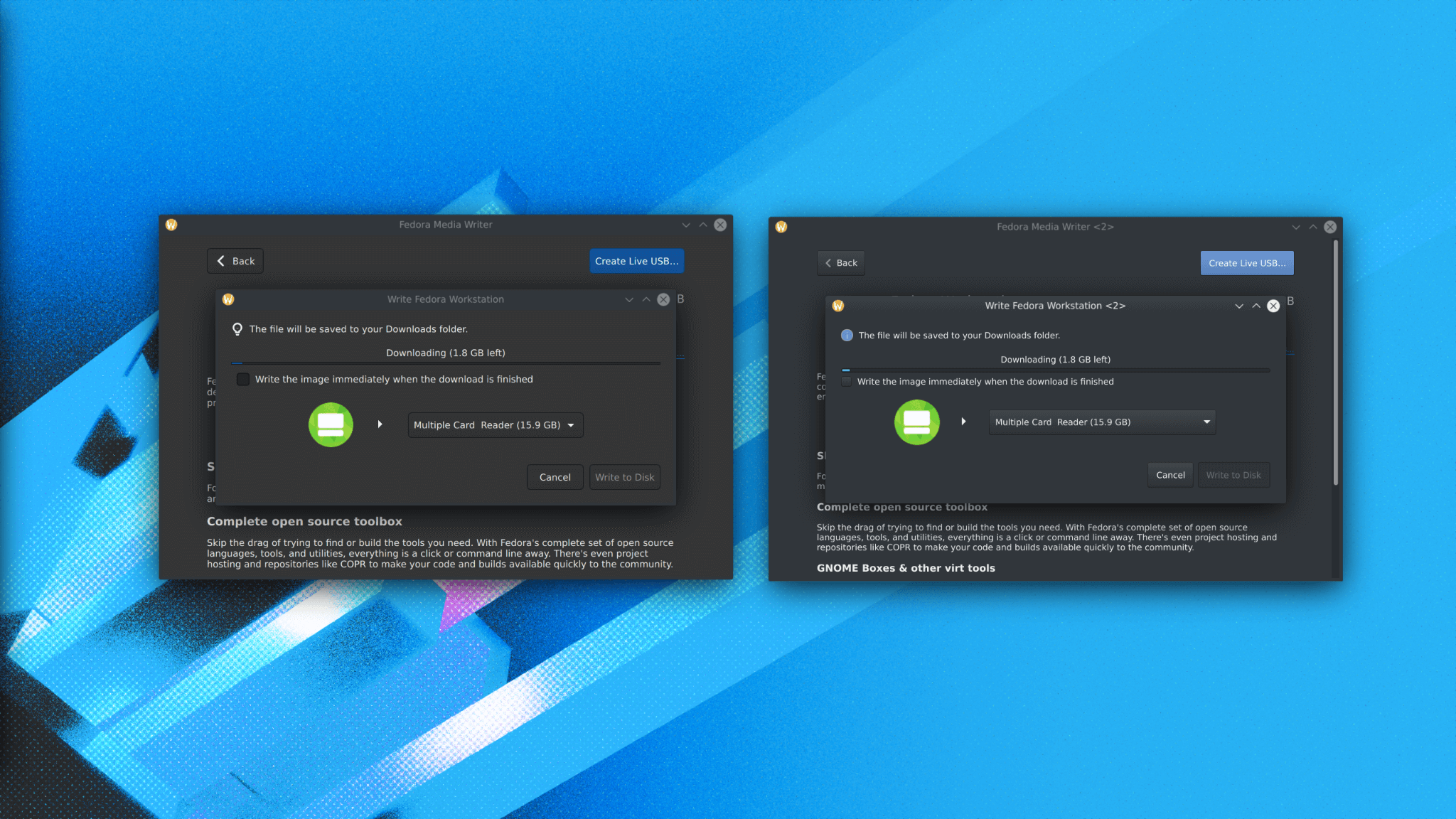

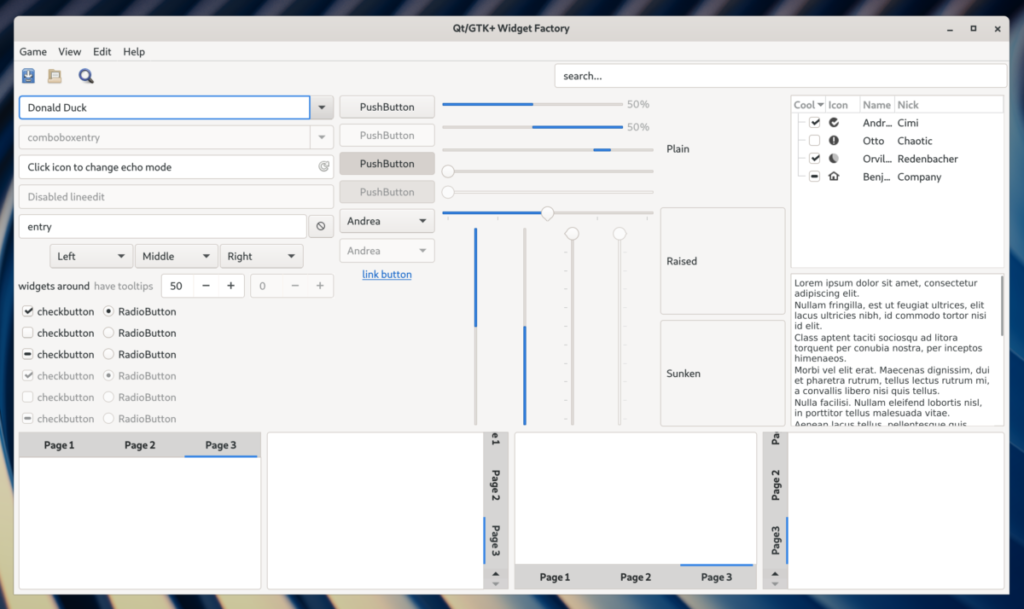

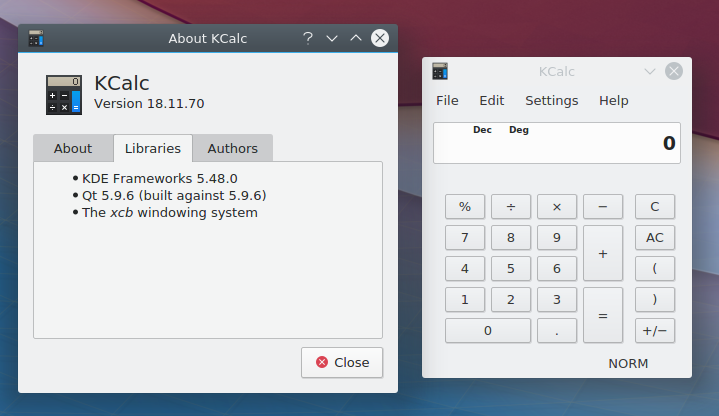

This is what the situation looks like. With each request to share a screen, you got the preview dialog from Chromium. This dialog consists from three pages. One is for screen sharing making one portal request, second one is for window sharing, which is another portal request, and the last one is just to allow you to share a web page you have opened. You had to confirm both portal dialogs, then confirm the Chromium dialog and finally you got one more portal dialog (ouch) to get the screen content on the web page itself.

I had a solution. I made all portal calls identified with an ID and shared this ID (portal call) in Chromium between both pages in the Chromium preview dialog and with the request made for the web page itself. With this solution we only had ONE portal dialog. This was a perfect solution (at least seemed to be). I started working on this at the beginning of this year, we exchanged many emails with people from Chromium UX team, because I wanted to do also some minor UI changes in the preview dialog. Unfortunately, those were rejected for consistency with all platforms. It was not a big deal and I submitted my changes for review, keeping UI as it was, just adding all necessary bits into Chromium and WebRTC to make it all work.

I wish to say things went smoothly since then, but the opposite is true. It took a while to get everything reviewed, but this is probably no surprise with this year being weird and many people working from home with less than ideal conditions. Anyway, few months passed away, I ended up rewriting my changes many times, not even counting hours I spent on it. This all resulted into me being obsessed with this change, it mattered to me so much to get it merged. I was constantly thinking about how to make it better, I was many times fixing issues in the evening (as reviewers were mostly US based), instead spending time with my family. It would be even better to waste my time with my beloved Playstation. This had really negative impact on my mental health and I realized this has to stop and I simply gave up, because I couldn’t continue this way and needed a break. I abandoned both changes (WebRTC and Chromium) and decided to just pick changes I will be able to successfuly upstream. I probably made my change too ambitious and complicated or maybe it’s just Chromium not being ready for this kind of change, because some tweaks were specific for my use-case. It’s also hard to say I wish upstream devs had helped me more, because there is so much to understand around Wayland, portals and PipeWire and way how it all works together.

Anyway, with a new start, without pressure after gaving up on the change, I picked the most important changes and submitted them separately. I was surprised now how smoothly this went and how fast those changes were upstreamed. Simply those changes were simple, understandable and easy to review. I didn’t gave up on fixing the “dialog hell” completely, I have some other ideas, but next time I will try to submit them step by step and will keep some distance and my free time.

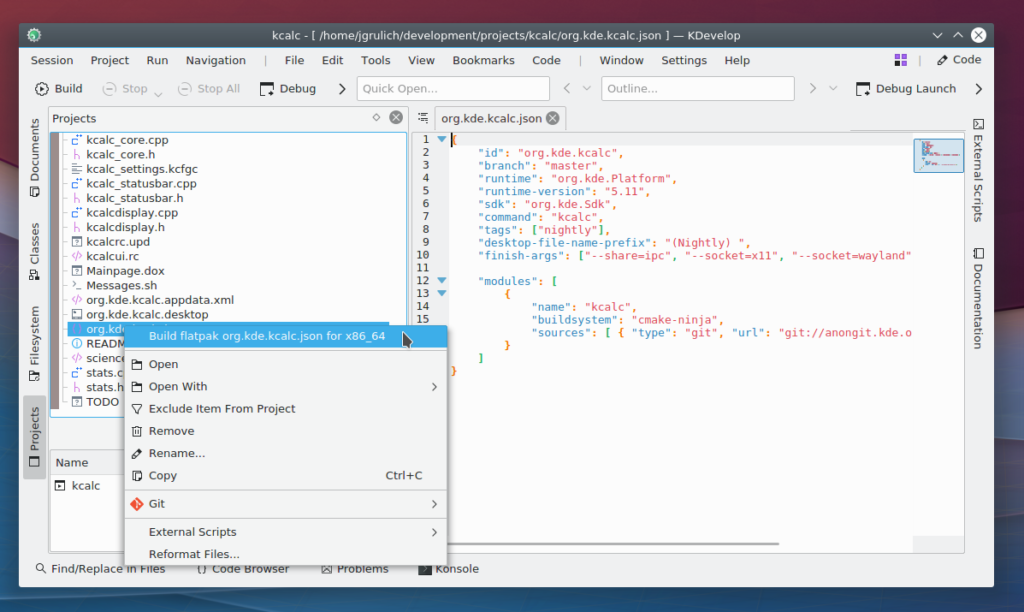

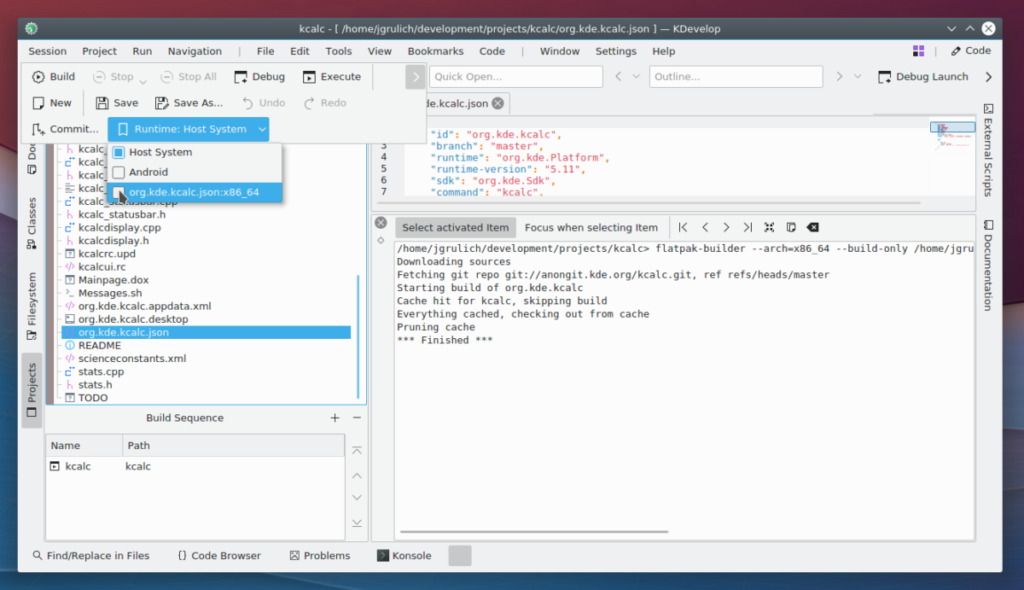

And what are the changes you can expect in upcoming Chromium release in 2021?

Support for PipeWire 0.3

You can now build Chromium/WebRTC with both PipeWire 0.2 and Pipewire 0.3. There is a new “rtc_pipewire_version” option you can pass to your build configs.

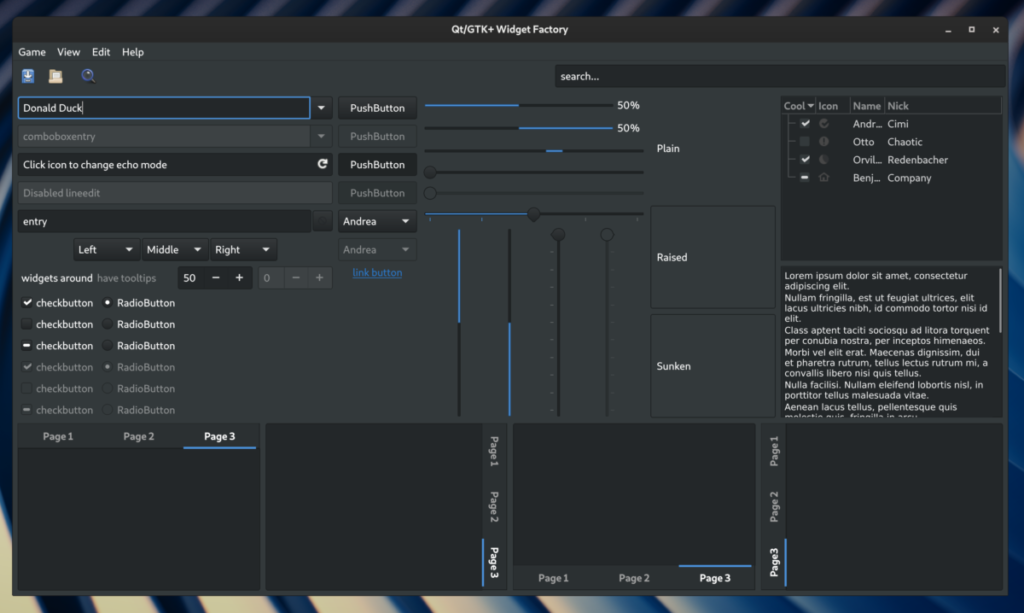

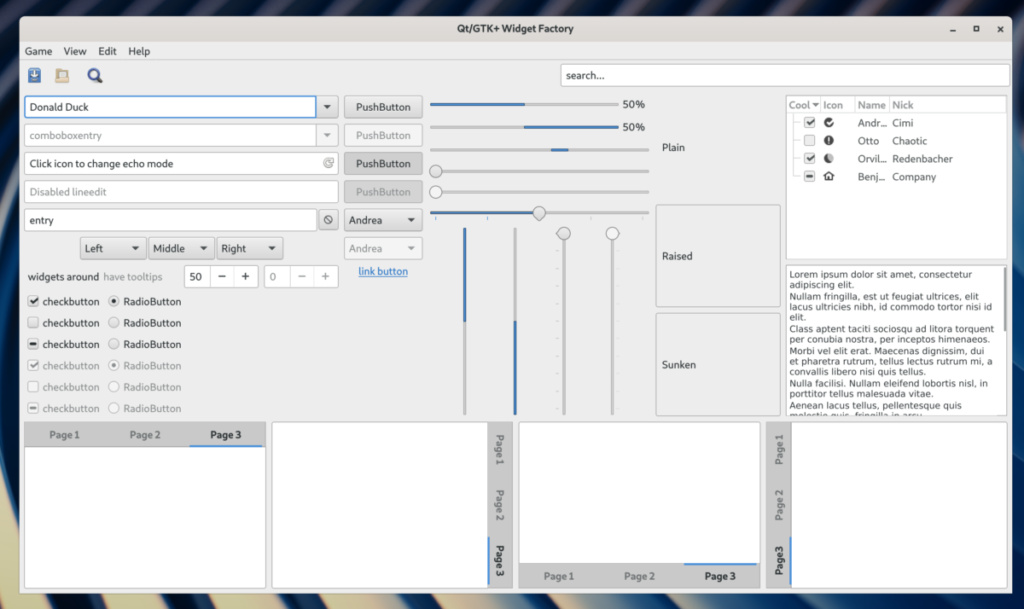

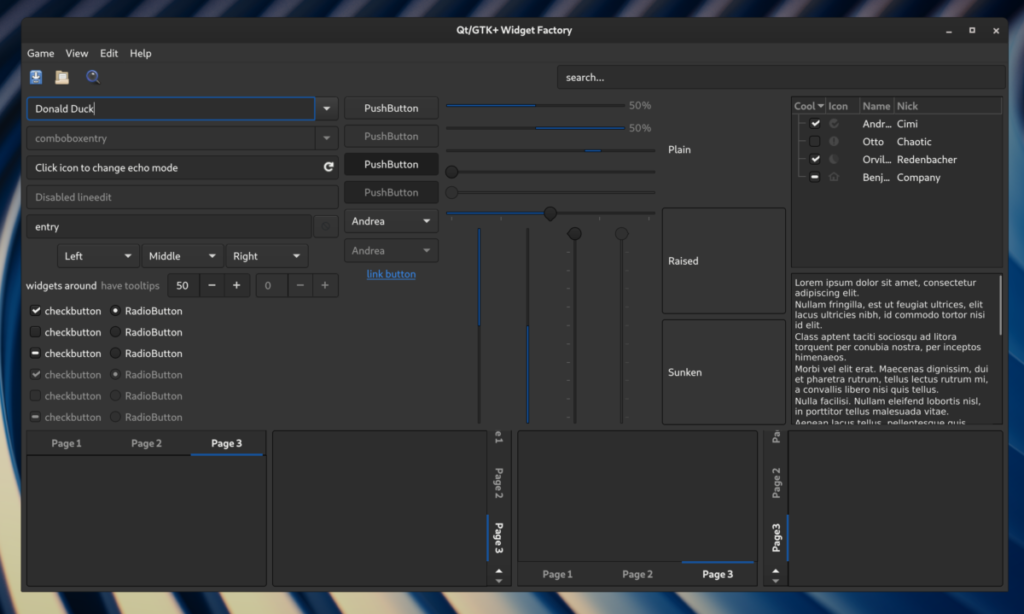

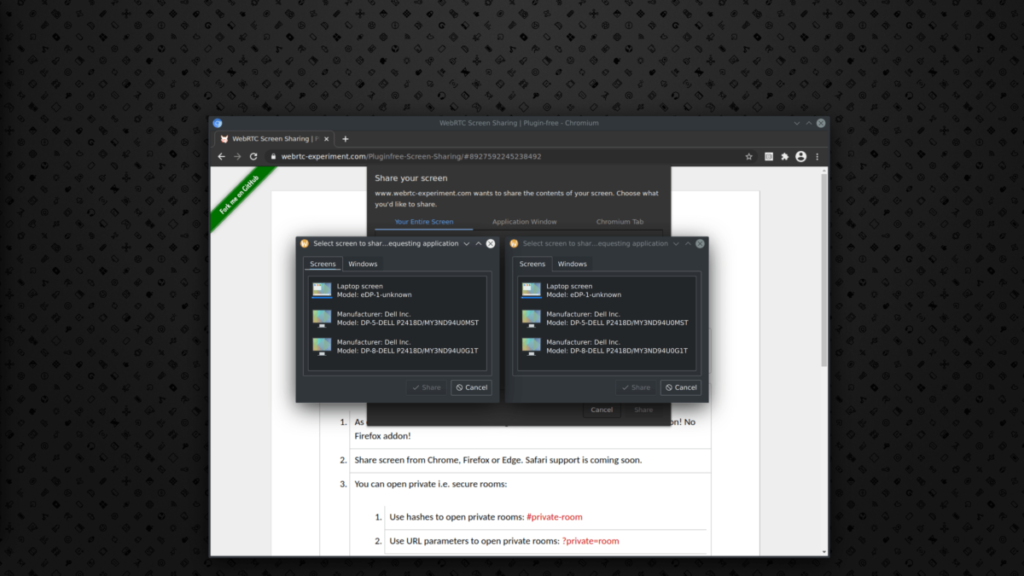

Window sharing support

There is probably no description needed. You will be able to share application windows in case you don’t want to share whole screen.

Suppport for DmaBuf and MemFd buffer types

This should allow faster transfer of your screen content from your Wayland compositor, through PipeWire to your browser.

Less portal dialogs involved

If you look back into the screenshot I posted above, you can see there are two portal dialogs opened just for the Chromium preview dialog. I at least tried to reduce this to just one portal dialog. This was done by removing the page for window sharing, because the screen share request will already handle both screen and windows.

I think you can expect above mentioned changes in Chromium 89 and I hope you will at least appreciate some of these improvements even though I didn’t deliver everything I wanted to. Also, thanks to Martin Stránský from our Firefox team, you can expect all these changes to be also part of Firefox.