If you are a regular reader of my blog posts, as I am sure you are, you will know that we made a switch with Fedora 41 and now use PipeWire as the default backend for camera handling in Firefox. It won’t come as a surprise that such a huge change is not without its problems. After talking to many of you and debugging the same issues over and over again, I would like to go through most of the common issues and show you how to fix them, and also shed some light on the whole stack.

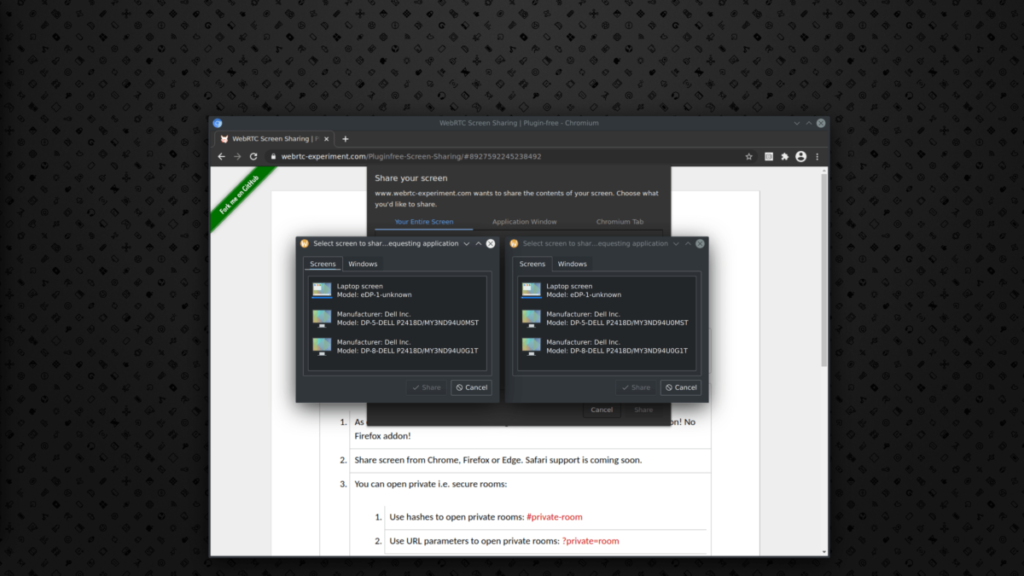

For Chrome/Chromium users out there, most of the issues mentioned here also apply to you, as most of the PipeWire camera code is shared in WebRTC, but I must mention that the PipeWire camera is completely broken in M131 and fixed in M132.

Issue #1 – Permission issue – “Camera is blocked” or “The request is not allowed” etc.

This is the most common problem and is usually caused by the user not giving access to the camera to an application that is requesting it. When Firefox wants to use a camera, it makes a request to the camera portal (xdg-desktop-portal). This results in a system dialogue asking for camera access for Firefox. If access is granted, it will be granted for all future sessions, but if access is denied or the dialogue is closed, it will remember this decision and all future requests to use the camera will be automatically denied.

You can check this by running:

$ flatpak permissions devices camera

Table Object App Permissions Data

devices camera yes 0x00

devices camera org.mozilla.firefox yes 0x00In the result you will see that “org.mozilla.firefox” has “yes” stored in the permission store. There is also an empty entry with “yes” stored. The empty entry is usually for applications for which we were unable to get an application id. This happens for host applications that are launched in an unusual way, such as the Alt + F2 command or from a terminal. If you have a permission problem, you will most likely see “no” stored there, and this is what is causing the problem for you.

You can clear this and be prompted again for camera access running this command:

flatpak permission-remove devices camera org.mozilla.firefoxYou may be wondering why flatpak is involved, since you don’t use flatpak applications. Flatpak is not really necessary, but I use its command line to work with the permission store and it is easier for me to just give you a command and you give me the result, otherwise you could also use Flatseal to check your camera permissions. The permission store comes from portals (xdg-desktop-portal), which we use to get access to your camera. While portals were originally intended to be used mainly by sandboxed applications (Flatpak, Snap), they are now also used for things like screen sharing (Wayland) and now the PipeWire camera, making them an essential part of the Linux desktop stack. Always make sure that xdg-desktop-portal is installed with a specific portal backend for your desktop, e.g. xdg-desktop-portal-gnome for GNOME or xdg-desktop-portal-kde for Plasma.

Issue #2 – No camera found

This can be a problem with many components. Let’s start with the most important one, which is finding out if Wireplumber (the session and policy manager for PipeWire) detects it.

You can run:

$ wpctl status

Video

├─ Devices:

│ 50. Integrated Camera [v4l2]

│ 62. Integrated Camera: Integrated C [libcamera]

│ 63. Integrated Camera: Integrated I [libcamera]

│ 69. Integrated Camera [v4l2]

│ 85. Integrated Camera [v4l2]

│ 93. Integrated Camera [v4l2]

│

├─ Sinks:

│

├─ Sources:

│ 76. Integrated Camera (V4L2)

│ * 80. Integrated Camera (V4L2)

│

├─ Filters:

│

└─ Streams:Here we are mainly interested in “Sources“, as this is what will appear in Firefox. Typically, most laptop cameras appear here twice, as one is an infrared camera for Windows Hello support, which we already filter out in Firefox. If your camera doesn’t appear there, it won’t work in Firefox or any other application that uses PipeWire.

If your camera is listed there but doesn’t appear in Firefox, I usually recommend that people try OBS Studio, which has great support for PipeWire cameras. This will always tell you if the problem is in Firefox or somewhere else. If it works in OBS Studio, you can open a bug to Firefox with all the necessary information (see below). If not, it is probably a bug in PipeWire.

We are already tracking one issue with the v4l2 plugin in PipeWire. This is most likely a race condition for which we have at least a workaround in the form of switching from v4l2 to libcamera.

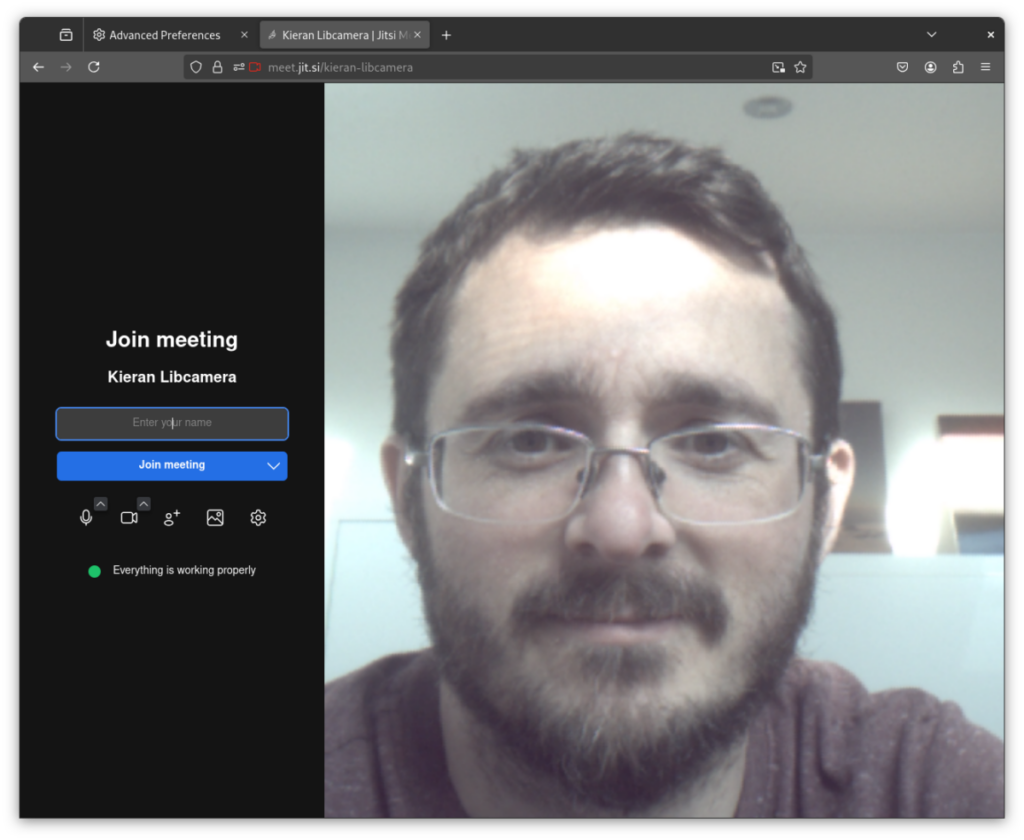

In order to use libcamera, you can create following file:

$HOME/.config/wireplumber/wireplumber.conf.d/99-libcamera.confWith the following content:

wireplumber.profiles = {

main = {

monitor.v4l2 = disabled

monitor.libcamera = optional

}

}And restart both Wireplumber and PipeWire:

systemctl --user restart pipewire wireplumberIf this doesn’t solve the problem for you, please follow the instructions below to report a bug to Firefox.

Another known problem, probably a rare one, is if you restart PipeWire while Firefox is still running. This is because we keep a connection to PipeWire and when you restart it, that connection is broken and not initialised again. This problem affects OBS Studio in the same way and I’m already working on a fix. The solution here is to restart Firefox.

Debugging and reporting issues to Firefox

You came here because none of the above worked? You can still report a bug with all the necessary information to help us identify the problem. First, you want to report a bug to Firefox upstream. You can do this here by selecting the “Core” product and the “WebRTC: Audio/Video” component and providing all the logs from below.

Include DBus communication with xdg-desktop-portal.

Open a terminal of your choice and run:

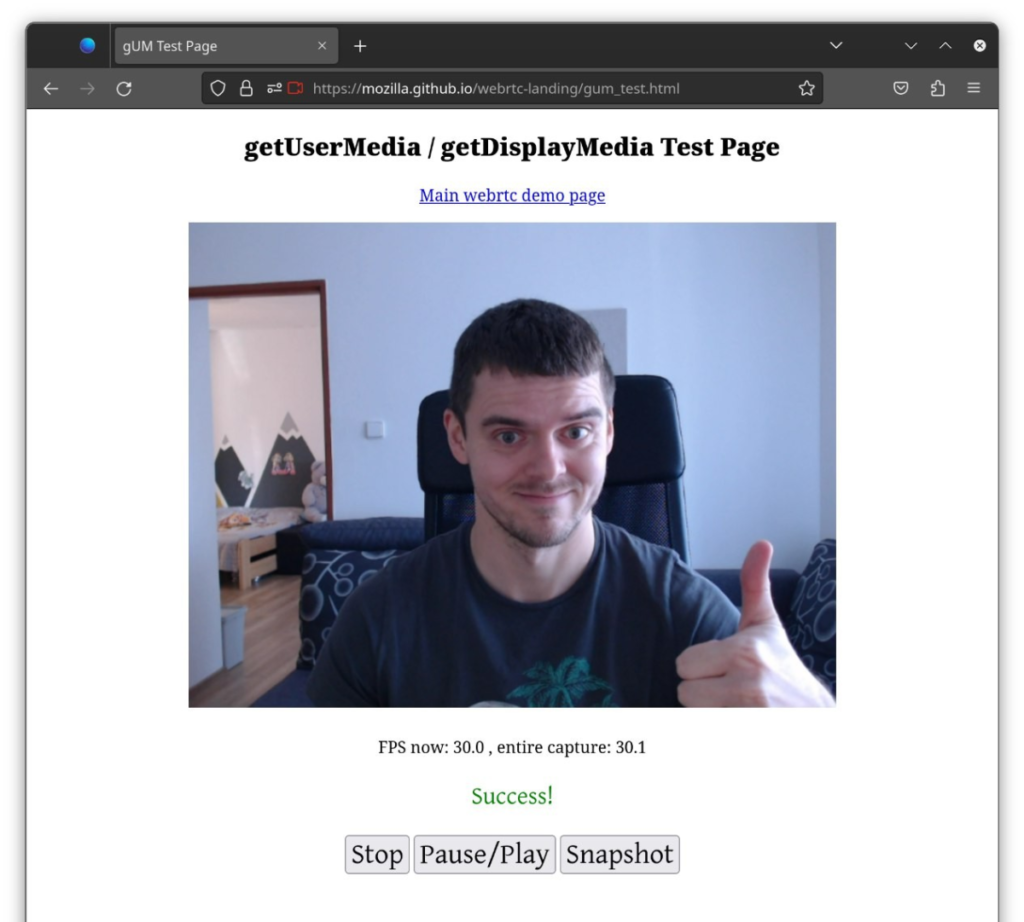

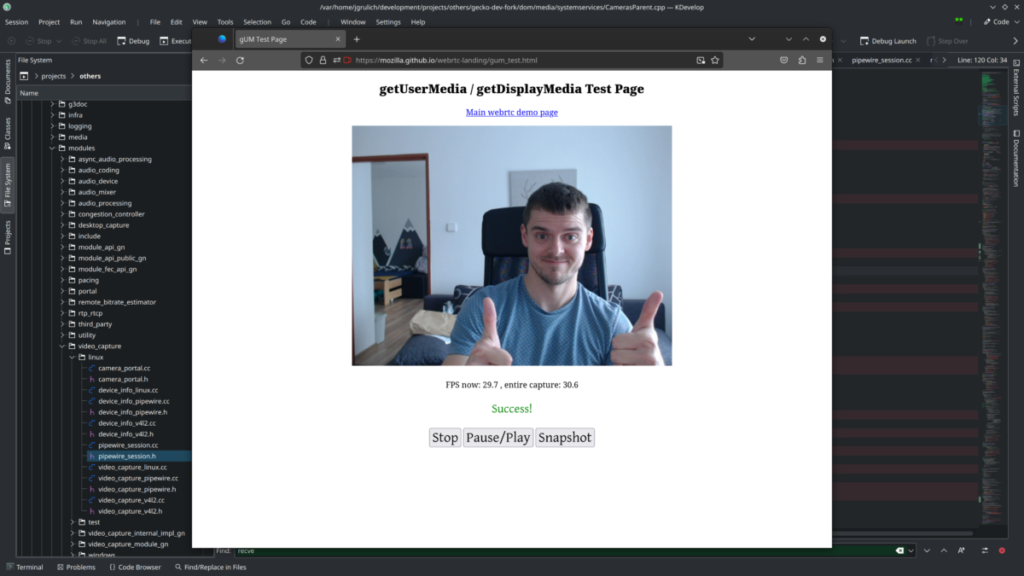

dbus-monitor --sessionKeep it running, while you try to access the camera in Firefox. For example, using the WebRTC getUserMedia test page. You should see all the DBus communication in the log from dbus-monitor.

Also a useful information might be to know whether the camera portal see any camera by running:

dbus-send --print-reply --dest=org.freedesktop.portal.Desktop /org/freedesktop/portal/desktop org.freedesktop.DBus.Properties.Get string:"org.freedesktop.portal.Camera" string:"IsCameraPresent"Including log from Firefox by running it with:

MOZ_LOG="MediaManager:5,CamerasParent:5,CamerasChild:5,VideoEngine:5,webrtc_trace:5"And a log from:

pw-monWhich will provide all advertised formats by your camera and supported formats by Firefox.

Last resort

Once you have done your duty and opened a bug with all the above information, which I’m really grateful for, you can now go to “about:config” in Firefox, disable “media.webrtc.camera.allow-pipewire” and restart Firefox. This will switch back from PipeWire to v4l2, but I hope you will accept this as a temporary solution until we can identify and fix your problem.

Debugging and reporting issues to Chromium

All the logs you can provide also apply to Chrome/Chromium, with the exception to logs from the app itself.

In order to get logs from Chrome/Chromium, you need to run it with:

google-chrome --enable-logging --vmodule=*/webrtc/*=1Once you have collected all the necessary logs, you can open a bug here for the “CameraCapture” component and add me to the bug (use grulja AT gmail.com) or let me know at least so I’m aware.

To switch back from PipeWire to v4l2 you have to go to “chrome://flags” and disable “PipeWire Camera support“, but you already know that since you had to enable it yourself before :).