I recently got an email from a user asking me how to make all this work on Fedora. Problem is that unlike in old XServer sessions, there are certain things which need to be enabled first. There are also dependencies which need to be installed and services which need to be running. While most of the dependencies are automatically installed and services automatically activated, there still might be situations when this is not true, for example when switching from another desktop so it’s better to cover it all. This tutorial targets Fedora, but it can be probably used by any other distribution.

Dependencies

Both screen sharing and remote desktop work almost identically on Wayland, they both use portals as a communication tool between applications and compositor (in this case Mutter) to start the process of sharing and setup PipeWire stream (see below). While portals were primarily meant to be used by sandboxed applications (e.g. Flatpak) to get access to system (like files or printing) outside the sandbox, their design perfectly fits for Wayland usage too. In Fedora you should have portals automatically installed, they are represented by two separate packages, first is xdg-desktop-portal, which is the portal service communicating with sandboxed applications and with a backend implementation of portals, and the second package is the backend implementation, in our case xdg-desktop-portal-gtk. Both are DBus activatable, which means they are automatically started whenever application calls them. The reason why portals consist from two services is that there can be multiple backends, each one providing native dialogs for your desktop. For example you don’t get a gtk dialog to open a file in KDE Plasma session or you want a backend communicating with specific compositor (like in our case with Mutter).

The second important dependency is PipeWire. PipeWire is the core technology used for screen content delivery from the compositor to applications. This is done throught a PipeWire stream shared between the compositor and application. PipeWire should be automatically installed on your system, the package name is pipewire and it provides socket-based activation so you shouldn’t need to worry if it’s running or not.

Enabling screen sharing and remote desktop in Gnome

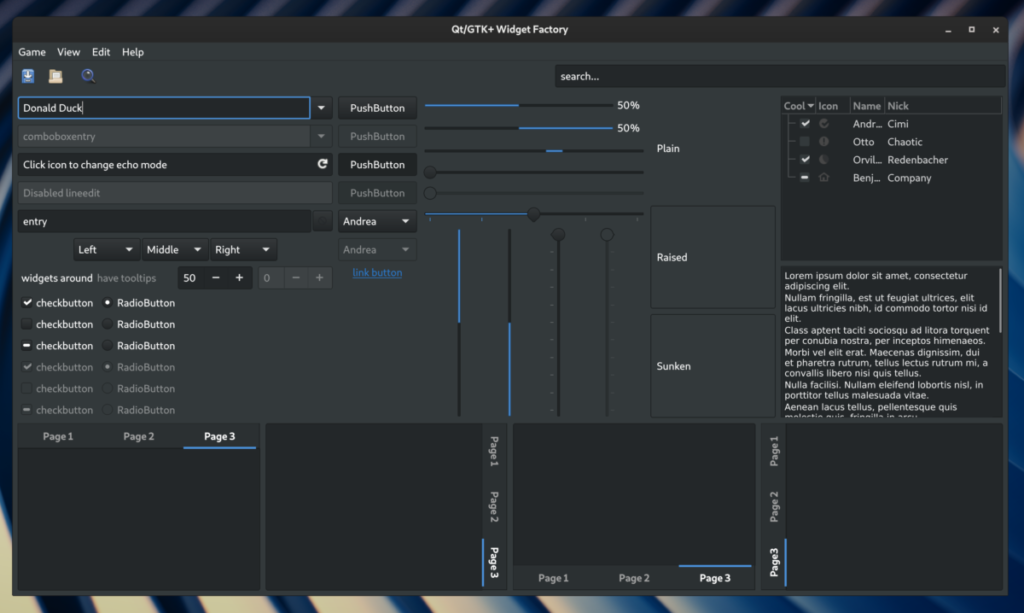

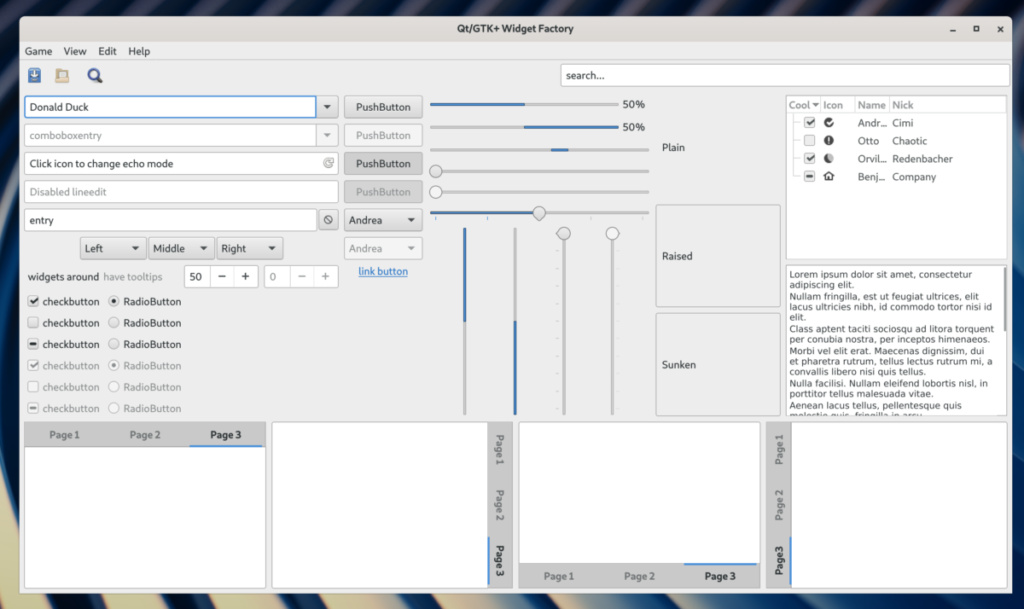

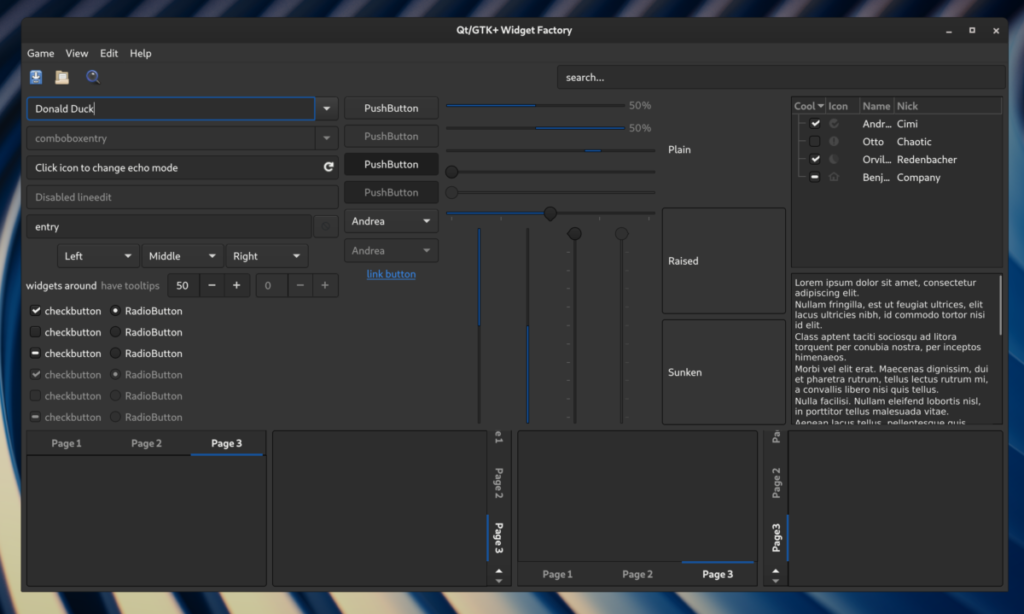

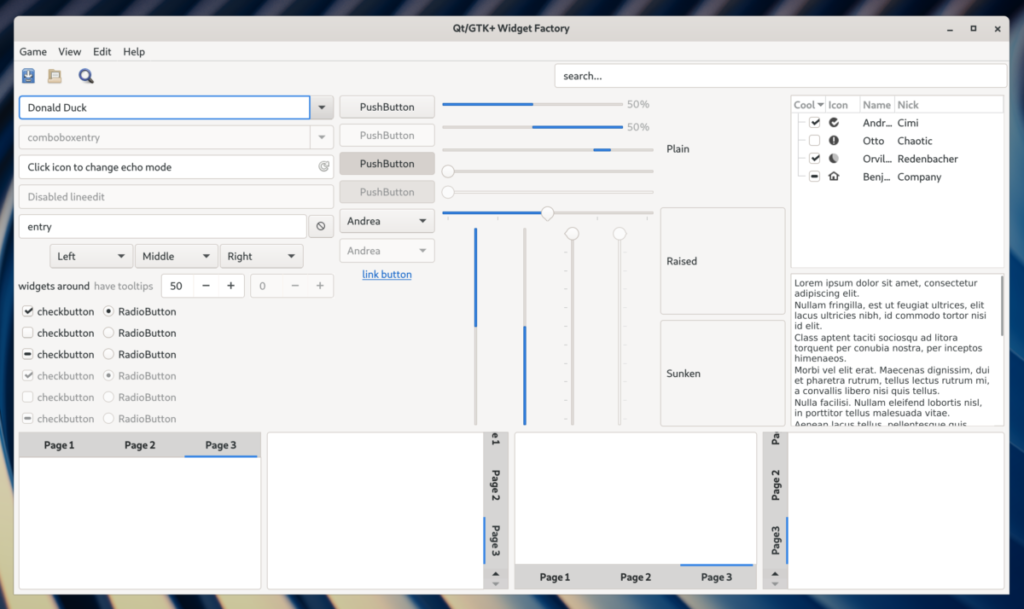

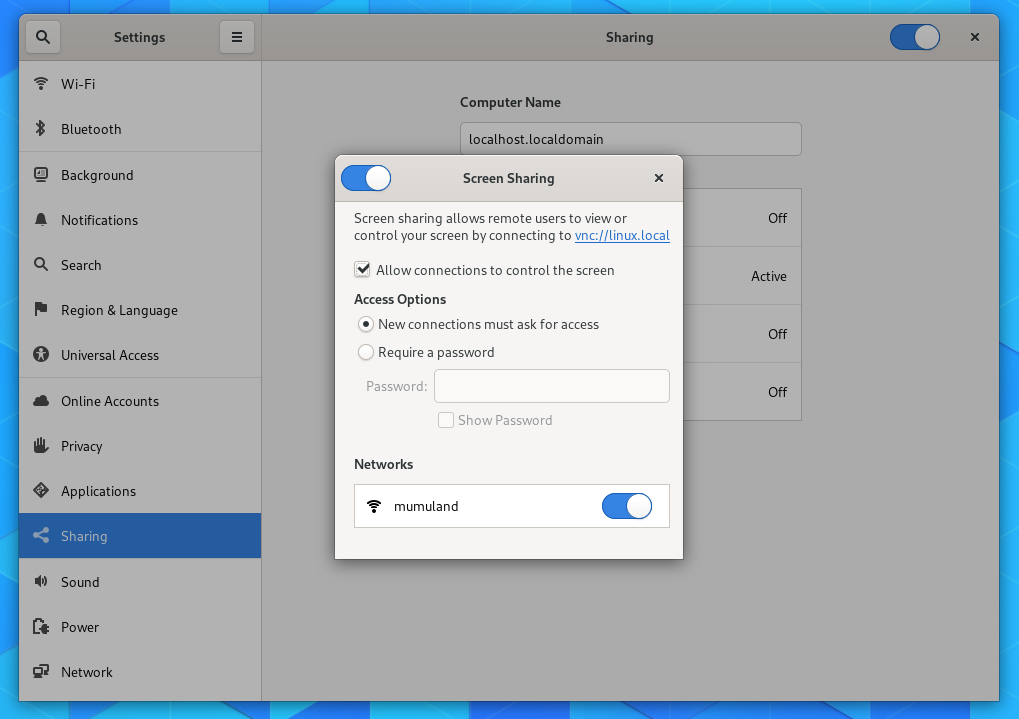

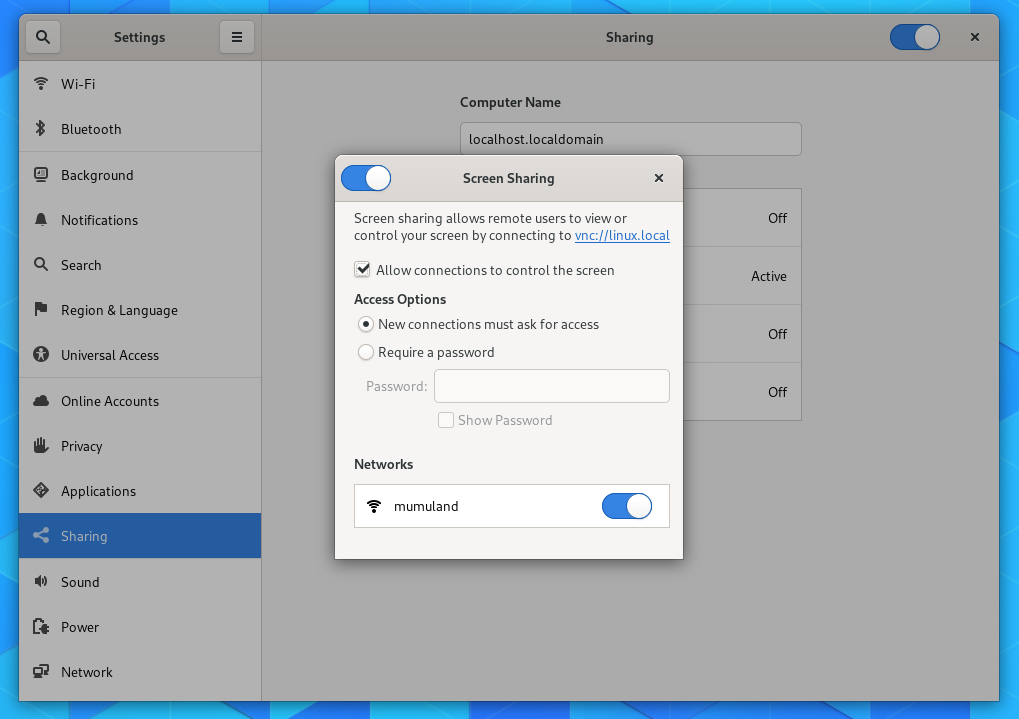

You don’t seem to do any additional step in order to make screen sharing work. However, you need to enable remote desktop (if you want to). Go and open gnome-control-center (Settings) and there go to Sharing section. There you should see this window when you click on Screen Sharing:

If you don’t have such option, make sure you have installed gnome-remote-desktop, because I’m not sure whether it’s installed by default. Allowing screen sharing will start a server instance which you can connect to from another computer, using vnc://linux.local or your_computer_ip:5900. You will most likely need to open a hole to your firewall, but the same you need to do for any other VNC server. I tried to connect with Vinagre and Krdc (KDE VNC viewer) and both worked for me, but I was unable to connect with Tigervnc (vncviewer) due to not maching security type.

Screen sharing in Firefox

Firefox in Fedora already comes with PipeWire support enabled and you don’t need to do anything special. You can test it for example with this testing page. The PipeWire support in Firefox unfortunately needs to be enabled during build by handmade changes, which is most likely not happening in other distributions, but from what I now there is an ongoing effort to make this configurable with a build option.

Screen sharing in Chromium/Chrome

Similar situation is with Chromium, where PipeWire needs to be also enabled during build, but it’s already configurable via a build option. In Fedora we have this enabled by default. Official Chrome builds are build with PipeWire support enabled as well. I should maybe mention that PipeWire support is in Chromium starting with version 74.

Unlike with Firefox, this support needs to be also enabled in runtime. You can do that with following chrome flag:

chrome://flags/#enable-webrtc-pipewire-capturer

Then you should be all set to be able to share a screen or a window from your Chromium or Chrome.

Issues

Don’t get scared by higher number of dialogs for screen/windows selection you will get when sharing screen in your browser. We are aware of this annoying usability issue and hopefully we will manage to solve it one day. The reason why this happens is that every browser provide their dialog for screen/window selection and in both browsers these dialogs show previews of your selection. You need to select screen/window first in the portal dialog for the preview dialog and once you accept the preview dialog in your browser, you again need to select screen/window in the portal dialog to get the content into the web page itself.

Support in other applications

There is a KDE application called Krfb, which in the next release (19.08) will have similar support for remote desktop on Wayland as you have in Gnome. Otherwise there are probably no other applications which would allow you to share a screen or control your desktop remotely on Gnome Wayland sessions. You will not be able to use TeamViewer, Tigervnc or any other application you are used to use. If you want to use these applications, you will have to switch to X session for now.